What happens when you test “efficient” AI architectures on real hardware instead of believing the papers

2:47 AM – The Numbers That Didn’t Make Sense

My RTX 4070 Ti was running hot. Three models, three very different stories:

Dense-120M: 2,308 tokens/sec | $0.37/1M tokens

Dense-300M: 5,827 tokens/sec | $0.15/1M tokens

MoE 3×60M: 1,798 tokens/sec | $0.47/1M tokens

Seq=256, batch=1, fp16 inference

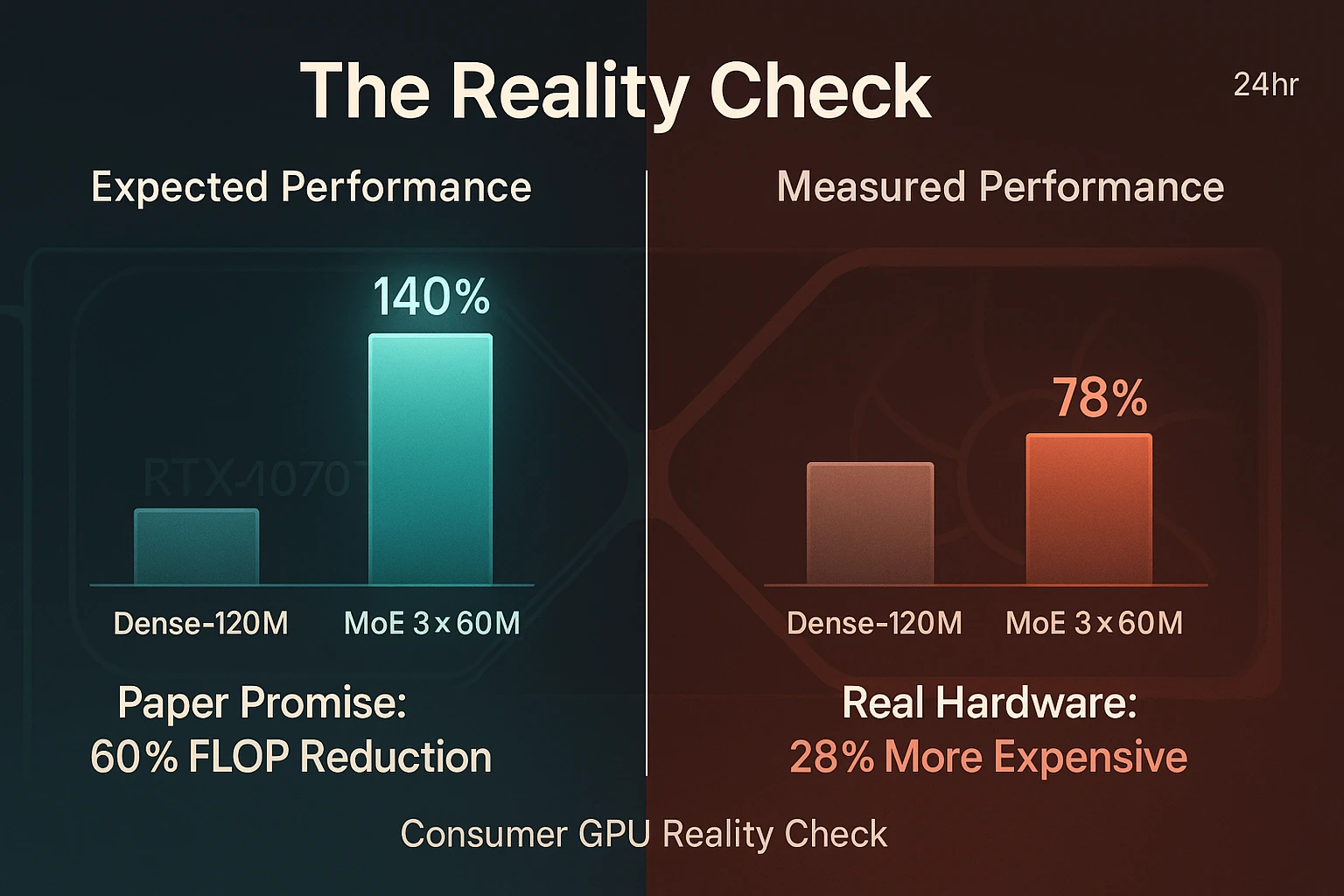

After 20 straight hours of coding, training, and profiling, my “revolutionary” sparse Mixture-of-Experts model was slower and more expensive than the control model I’d built as a baseline.

The theoretical 60% efficiency gains from the research papers? Nowhere to be found on consumer hardware.

This is the story of what I learned when I stopped reading about AI efficiency and started measuring it.

The Alchemist Dream

The morning before, I’d been sketching ideas for an AI I called Alchemist—not because I believed in transmuting lead to gold, but because I wanted to build something that combined separate components into something greater.

Instead of one massive model trying to do everything, why not route different types of thinking to specialized experts? Memory tasks to a retrieval specialist. Math problems to a reasoning expert. Creative writing to a language specialist.

The architecture that made this possible was Sparse Mixture-of-Experts (MoE)—the same routing principle used at OpenAI and Anthropic scale. Instead of activating all 120 million parameters for every token, route each input to just 2-3 relevant experts, using only 16 million active parameters. (Active params = weights actually multiplied for each token; fewer means less math per step—on paper.)

The math was compelling:

- 5× reduction in memory usage

- Theoretical 60% fewer FLOPs per token

- Same quality with fraction of compute cost

Every paper I read confirmed this. Google’s Switch Transformer showed 7× speedups. Anthropic and OpenAI had bet their companies on it.

But every benchmark ran on hardware worth more than my car.

The 24-Hour Reality Check

I gave myself one day to settle this question with real measurements instead of theoretical analysis. Not paper citations or blog post speculation, but actual CUDA kernels running on actual consumer hardware.

The Build Sprint

| Time | Milestone |

|---|---|

| 10:00 AM | git init sparse-moe-benchmark |

| 2:00 PM | Router architecture compiling, dataset preprocessing complete |

| 7:00 PM | First full training runs finish for all three models |

| 2:00 AM | Throughput and latency measurements collected |

| 10:00 AM | Results table complete—dense beats sparse by 28% on cost |

The setup:

- RTX 4070 Ti SUPER (16GB) – $800 GPU, not a $50K DGX

- Three models: Dense 120M, Dense 300M, and MoE 3×60M

- Same dataset (GSM8K math problems; repeated test on 1GB Wikipedia sample—rankings identical, data in repo)

- Same training, same measurement methodology

- Everything reproducible in under 10 minutes

What the Data Actually Showed

Here’s what measuring real performance on real hardware taught me:

| Model | Throughput | VRAM | Active Params | Cost/1M tokens |

|---|---|---|---|---|

| Dense-120M | 2,308 tok/s | 0.53 GB | 124M | $0.37 |

| Dense-300M | 5,827 tok/s | 0.28 GB | 67M | $0.15 |

| MoE 3×60M | 1,798 tok/s | 0.10 GB | 16M | $0.47 |

Seq=256, batch=1, fp16 inference. VRAM = two experts + router (weights swapped on demand). Cost assumes GPU TDP = 285W, $0.12/kWh, wall-clock sec from throughput test; calc script in repo.

The MoE Did Deliver On Memory

The sparse model used 5× less VRAM and had 8× fewer active parameters per token. For edge devices with severe memory constraints, this matters enormously.

But Throughput Collapsed

The routing overhead—the computational cost of deciding which expert handles each token—ate all the theoretical gains. The MoE was 22% slower than Dense-120M and 69% slower than Dense-300M.

And Cost Per Token Increased

Even accounting for lower power consumption from fewer active parameters, the MoE cost 28% more per million tokens than the dense baseline. The routing logic turned out to be expensive.

The papers answered a different question than the one I was asking. They were measuring different things on different hardware with different constraints.

Why This Matters for Real Deployments

The Memory vs. Performance Trade-off

If you have unlimited VRAM, dense models are faster and cheaper per token. But if you’re deploying to edge devices, phones, or budget cloud instances, MoE might be your only option for running large models at all.

The question isn’t “Is MoE more efficient?” It’s “Efficient at what, and under which constraints?”

The Overhead Nobody Talks About

Academic papers optimize for massive TPU pods where routing overhead disappears into parallel execution. On single GPUs, that overhead dominates. The router itself—a 4-layer MLP deciding which experts to activate—becomes a significant bottleneck.

When to Choose Each Approach

- Dense models: When you have adequate VRAM and want maximum throughput

- MoE models: When memory is severely constrained and you can accept lower throughput

- Larger dense models: Often faster per token than smaller MoE alternatives

The Methodology That Made This Possible

Building reliable benchmarks in 24 hours required some shortcuts, but the core methodology was solid:

Hardware consistency: Same GPU, same drivers, same power settings

Statistical rigor: Multiple runs, CUDA event timing, controlled batch sizes

Reproducibility: Complete code, raw data, and instructions available

Sanity checks: Gradient flow tests, routing distribution analysis, shape validation

The key insight was measuring wall-clock time and actual power consumption, not theoretical FLOPs. Theory is clean. Practice has overhead.

What I Learned About AI Research vs. AI Engineering

Papers Optimize for Different Constraints

Academic research assumes infinite compute budgets and focuses on pushing absolute boundaries. Engineering assumes real hardware limitations and optimizes for practical deployment.

Neither approach is wrong, but they’re solving different problems.

Single Points of Measurement Can Mislead

MoE models might become more efficient at larger scales, with better routing algorithms, or on different hardware architectures. My test covers one specific case: small models on consumer GPUs.

The Value of Negative Results

Publishing “MoE doesn’t work here” is as valuable as publishing “MoE works great there.” The AI field needs more honest reporting about when theoretical improvements don’t translate to practical gains.

Building Things vs. Reading About Them

Six months ago, I would have spent weeks reading papers about MoE efficiency and emerged with the same theoretical knowledge as everyone else. Instead, I spent one day measuring actual performance and learned something new.

This same approach applies to other AI architecture questions. Rather than trusting benchmarks from different hardware or theoretical analysis, measure what matters for your specific constraints.

Each experiment takes days instead of months and produces actionable insights instead of theoretical knowledge.

The Alchemist Project Continues

I still believe in AI that can think, remember, and reflect. But I know now that MoE isn’t the path for consumer hardware deployment—at least not with current routing architectures.

Next experiments:

- Memory-augmented transformers with external retrieval

- Progressive reasoning with intermediate checkpoints

- Self-critique loops for iterative improvement

Each will get the same treatment: real hardware, real measurements, real constraints.

Why This Approach Matters

The AI field moves fast, and most of us make decisions based on what we read rather than what we measure. But the gap between theory and practice is often larger than we assume.

For researchers: Consider including single-GPU benchmarks alongside your TPU pod results

For engineers: Test your assumptions on your actual deployment hardware

For everyone: Sometimes the best way to understand a technology is to build it yourself

The most expensive mistakes in AI aren’t coding errors—they’re architectural decisions based on incomplete information.

The complete benchmark code, raw data, and reproduction instructions are available at sparse-moe-benchmark. Because real insights require reproducible methods.

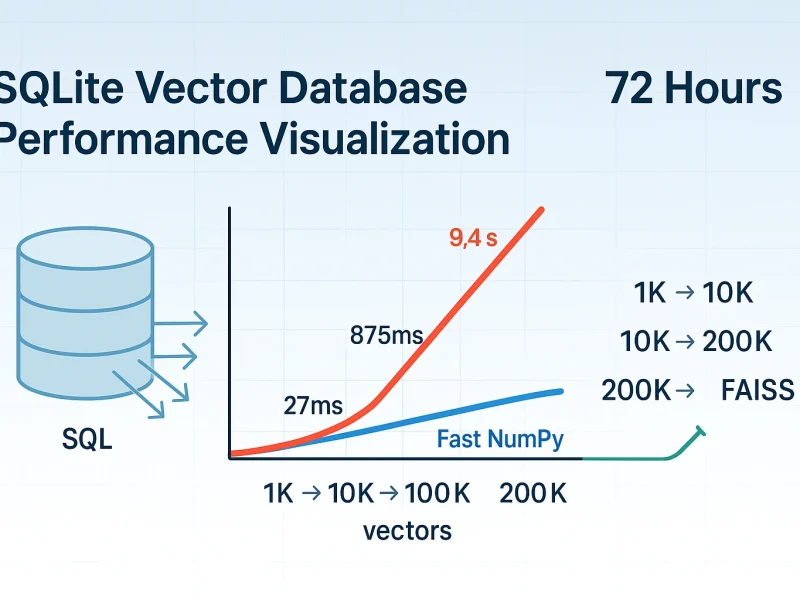

Need a 24-hour reality check on your AI architecture idea? Full analysis delivered in 72 hours. Fixed fee $2,500 → mailto:j.caldwell@simplychaos.org